Not So Fast, Classifier – Accuracy and Entropy Reduction in Incremental Intent Classification

Abstract

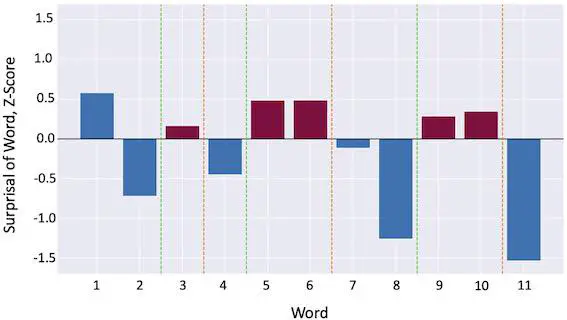

Incremental intent classification requires the assignment of intent labels to partial utterances. However, partial utterances do not necessarily contain enough information to be mapped correctly, and with a certain degree of confidence, to the intent class of their complete utterance. Using the final interpretation as the ground truth to measure a classifier’s accuracy on partial utterances is thus problematic. We release inCLINC, a dataset of partial and full utterances with human annotations of plausible intent labels for different portions of each utterance, as an upper (human) baseline for incremental intent classification. We analyse the incremental annotations and propose entropy reduction as a measure of human annotators’ convergence on an interpretation (i.e. an intent label). We argue that, when the annotators have not yet converged on one or a few possible interpretations but the classifier already identifies the final intent class early on, this is a sign of overfitting that can be ascribed to artefacts in the dataset.
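To make the notion of entropy reduction concrete, the following is a minimal sketch rather than the paper's exact formulation. It assumes that entropy is the Shannon entropy (in bits) of the empirical distribution of intent labels chosen by annotators for one utterance prefix, and that the reduction is the difference in entropy between consecutive prefixes. The function names, the label names, and the annotation data are hypothetical and chosen only for illustration.

```python
import math
from collections import Counter


def label_entropy(labels):
    """Shannon entropy (bits) of the empirical distribution over labels."""
    counts = Counter(labels)
    total = len(labels)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def entropy_reductions(annotations_per_prefix):
    """Entropy drop between each pair of consecutive prefixes.

    A positive value means the annotators agreed more after seeing the
    longer prefix than after seeing the shorter one.
    """
    entropies = [label_entropy(labels) for labels in annotations_per_prefix]
    return [prev - curr for prev, curr in zip(entropies, entropies[1:])]


# Hypothetical labels from four annotators for three growing prefixes
# of one utterance (illustrative only, not taken from inCLINC).
prefixes = [
    ["transfer", "balance", "pay_bill", "card_lost"],  # shortest prefix
    ["transfer", "transfer", "pay_bill", "transfer"],  # longer prefix
    ["transfer", "transfer", "transfer", "transfer"],  # full utterance
]

entropies = [label_entropy(p) for p in prefixes]
reductions = entropy_reductions(prefixes)

for i, h in enumerate(entropies):
    print(f"prefix {i}: entropy = {h:.3f} bits")
for i, r in enumerate(reductions):
    print(f"prefix {i} -> {i + 1}: reduction = {r:.3f} bits")
```

Under these assumptions, a prefix on which every annotator picks a different label has maximal entropy, and a prefix on which all annotators agree has zero entropy. A classifier that already outputs the final intent class while the annotators' entropy is still high would be the kind of case the abstract flags as a possible sign of overfitting.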